Load balancing is a core component of backend systems and underpins the reliability, availability, and scalability of applications. Online services are expected to be fast and dependable, so incoming traffic has to be distributed across multiple servers to keep any one server from being overwhelmed. This post covers the fundamentals of load balancing, the main types of load balancers and algorithms, and how load balancing fits into cloud and microservices backends.

What is Load Balancing?

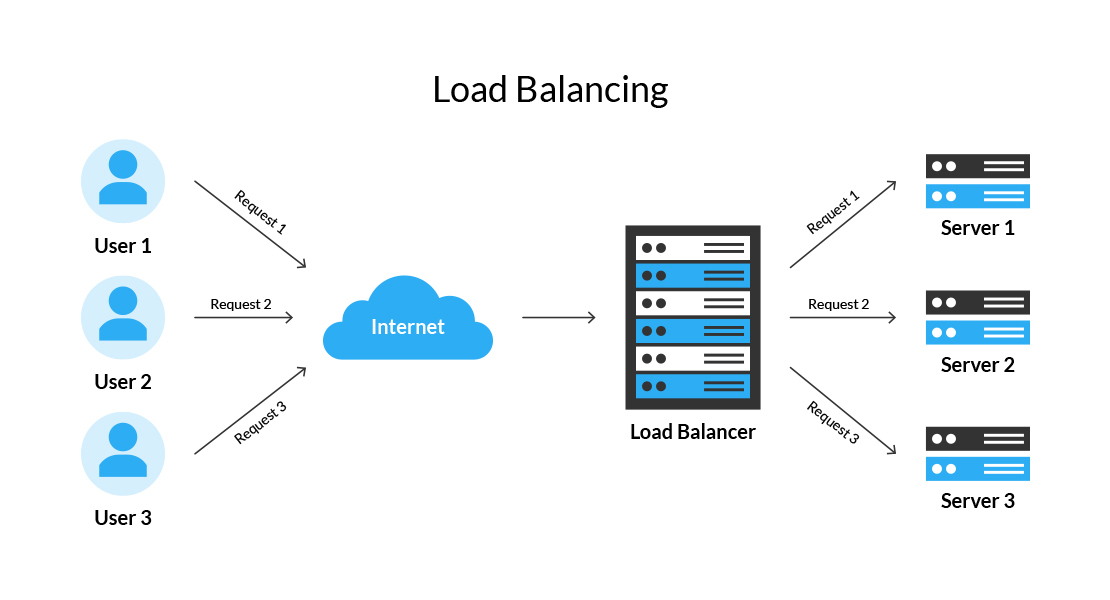

Load balancing is the process of distributing incoming network traffic across multiple servers, which are collectively known as a server farm or a server pool. In backend systems, load balancers work to maintain a smooth flow of data requests by ensuring that no single server is overburdened. This is especially important in environments where there are fluctuating traffic patterns, such as e-commerce platforms during sale periods, streaming services during peak hours, or any web application experiencing high volumes of requests.

Why Load Balancing is Essential

The main objectives of load balancing are:

- Improved Performance: By spreading requests across multiple servers, applications can handle higher volumes of traffic without response times degrading.

- Fault Tolerance and Redundancy: If one server fails, the load balancer reroutes traffic to other available servers, ensuring continuous service.

- Scalability: As an application grows, additional servers can be added to the pool, and the load balancer can adjust accordingly.

- Reduced Latency: Traffic is directed to the closest or least busy server, reducing wait times and improving response times for users.
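The fault-tolerance objective above can be illustrated with a minimal sketch. It assumes the balancer already knows each server's health (the `health` table and the server names are hypothetical) and simply skips servers marked as down; how health is actually probed is outside the scope of this example.

```python
from typing import Dict, List


def healthy_servers(health: Dict[str, bool]) -> List[str]:
    """Return the servers currently marked healthy, in pool order."""
    return [server for server, ok in health.items() if ok]


def route(request_id: int, health: Dict[str, bool]) -> str:
    """Pick a healthy server for the request, skipping failed ones."""
    candidates = healthy_servers(health)
    if not candidates:
        raise RuntimeError("no healthy servers available")
    return candidates[request_id % len(candidates)]


# Hypothetical pool: all three servers start out healthy.
health = {"s1": True, "s2": True, "s3": True}
before = [route(i, health) for i in range(3)]
print(before)  # ['s1', 's2', 's3']

# s2 fails; subsequent requests are spread over the remaining servers.
health["s2"] = False
after = [route(i, health) for i in range(4)]
print(after)  # ['s1', 's3', 's1', 's3']
```

Clients never see the failed server because it is filtered out before a choice is made.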

How Load Balancing Works

At its core, a load balancer acts as a reverse proxy, receiving incoming traffic and forwarding it to one of several backend servers. A load balancer can be deployed as a hardware device, a software solution, or a cloud-based service. It examines each incoming request and directs it to a suitable server based on the configured algorithm or rules, the current load on each server, and network conditions.

Here’s a simplified process flow for load balancing in backend systems:

- Request Reception: The load balancer receives a request from a client (e.g., a user accessing a website).

- Decision Making: Based on the chosen algorithm, the load balancer selects the best server to handle the request.

- Forwarding: The load balancer forwards the request to the selected server.

- Response: Once the server processes the request, it sends the response back to the client, often routed through the load balancer.
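The four steps above can be sketched in a few lines of Python. This is an in-process toy rather than a real proxy: the backends are plain functions, and the names `make_backend`, `LoadBalancer`, and `choose` are assumptions made for illustration. The selection rule is passed in, so any algorithm can be plugged into the decision step.

```python
import random
from typing import Callable, Dict, List

Backend = Callable[[str], str]
Chooser = Callable[[List[str]], str]


def make_backend(name: str) -> Backend:
    """Build a hypothetical backend that just reports who handled the request."""
    def handle(request: str) -> str:
        return f"{name} handled {request}"
    return handle


class LoadBalancer:
    def __init__(self, backends: Dict[str, Backend],
                 choose: Chooser = random.choice) -> None:
        self._backends = backends
        self._choose = choose  # selection rule; random by default

    def handle(self, request: str) -> str:
        # 1. Request reception: `request` has arrived from the client.
        # 2. Decision making: ask the selection rule for a backend name.
        name = self._choose(list(self._backends))
        # 3. Forwarding: hand the request to the chosen backend.
        response = self._backends[name](request)
        # 4. Response: relay the backend's reply to the client.
        return response


pool = {name: make_backend(name) for name in ("app-1", "app-2")}
balancer = LoadBalancer(pool)
print(balancer.handle("GET /"))  # handled by app-1 or app-2, chosen at random
```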

Types of Load Balancers

Load balancers can be categorized based on the layer of the OSI model they operate on. The two main types are:

Layer 4 Load Balancers: Operate at the transport layer (OSI Layer 4), handling traffic based on IP addresses and TCP/UDP ports. They make routing decisions without looking at the actual content of the message. They’re fast and efficient, making them ideal for applications with simple routing requirements.

Layer 7 Load Balancers: Operate at the application layer (OSI Layer 7), allowing them to make more complex routing decisions based on the content of the request, such as URLs, cookies, or HTTP headers. This is useful for more sophisticated backend systems that require intelligent request handling, such as directing users to different servers based on their location or device.
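To make the difference concrete, here is a small sketch contrasting the two kinds of decision. The `Request` type, the pool names, and the routing rules are all invented for illustration; the point is only which fields each function is allowed to look at.

```python
import zlib
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Request:
    client_ip: str
    client_port: int
    path: str = "/"
    headers: Dict[str, str] = field(default_factory=dict)


L4_POOL = ["10.0.0.1", "10.0.0.2"]  # hypothetical backend addresses


def route_l4(req: Request) -> str:
    """Layer 4 style: decide from connection metadata only (address and port)."""
    key = f"{req.client_ip}:{req.client_port}".encode()
    return L4_POOL[zlib.crc32(key) % len(L4_POOL)]


def route_l7(req: Request) -> str:
    """Layer 7 style: decide from request content such as the URL or headers."""
    if req.path.startswith("/api/"):
        return "api-pool"
    if "Mobile" in req.headers.get("User-Agent", ""):
        return "mobile-pool"
    return "web-pool"


req = Request("203.0.113.7", 51000, "/api/orders")
print(route_l7(req))  # api-pool
print(route_l4(req) in L4_POOL)  # True
```

The Layer 4 function never reads `path` or `headers`, which is why that style of balancer can be fast but cannot route by content.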

Load Balancing Algorithms

Load balancers use various algorithms to determine how to distribute traffic across servers. Some of the most common algorithms are:

1. Round Robin

In a round-robin configuration, requests are sent to servers sequentially. The load balancer distributes each new request to the next server in the pool in a circular manner. Once it reaches the last server, it loops back to the first server and starts again. This is a simple but effective method, particularly when all servers have roughly the same capacity and performance.
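A minimal sketch of the rotation, assuming a fixed pool with made-up server names:

```python
from itertools import cycle
from typing import Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Yield servers in order, wrapping back to the first after the last."""
    return cycle(servers)


picker = round_robin(["s1", "s2", "s3"])
first_five = [next(picker) for _ in range(5)]
print(first_five)  # ['s1', 's2', 's3', 's1', 's2']
```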

2. Least Connections

The least connections algorithm directs traffic to the server with the fewest active connections. This method ensures that the server with the lightest load receives the next request, making it suitable for applications with long-lived connections or inconsistent workloads.
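One way to sketch this is to keep a counter of open connections per server. The class and method names below are assumptions for illustration, and ties are broken by pool order.

```python
from typing import Dict, List


class LeastConnections:
    def __init__(self, servers: List[str]) -> None:
        # Number of currently open connections per server.
        self.active: Dict[str, int] = {server: 0 for server in servers}

    def acquire(self) -> str:
        """Pick the server with the fewest active connections and count the new one."""
        server = min(self.active, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        self.active[server] -= 1


lc = LeastConnections(["s1", "s2"])
first = lc.acquire()   # s1 (both idle, first in the pool wins the tie)
second = lc.acquire()  # s2 (s1 now has one connection)
lc.release(first)      # the connection to s1 closes
third = lc.acquire()   # s1 again, since it is idle while s2 is busy
print(first, second, third)  # s1 s2 s1
```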

3. Least Response Time

This method routes traffic to the server with the lowest response time and the fewest active connections. It is well suited to latency-sensitive applications because it shortens the time a client waits for a response.
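How the two signals are combined varies between products. The sketch below assumes one simple scoring rule, average response time multiplied by (active connections + 1), with a moving average over observed latencies. The rule, the class, and the `alpha` smoothing factor are illustrative choices, not a standard.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ServerStats:
    avg_ms: float = 0.0  # moving average of observed response times
    samples: int = 0     # number of response times recorded so far
    active: int = 0      # currently open connections


class LeastResponseTime:
    def __init__(self, servers: List[str], alpha: float = 0.2) -> None:
        self.stats: Dict[str, ServerStats] = {s: ServerStats() for s in servers}
        self.alpha = alpha  # weight given to the newest sample

    def record(self, server: str, elapsed_ms: float) -> None:
        """Fold a newly observed response time into the server's average."""
        st = self.stats[server]
        if st.samples == 0:
            st.avg_ms = elapsed_ms
        else:
            st.avg_ms = (1 - self.alpha) * st.avg_ms + self.alpha * elapsed_ms
        st.samples += 1

    def pick(self) -> str:
        """Lowest score wins; servers with no samples yet score 0 and are tried first."""
        return min(self.stats,
                   key=lambda s: self.stats[s].avg_ms * (self.stats[s].active + 1))


lrt = LeastResponseTime(["s1", "s2"])
lrt.record("s1", 120.0)
lrt.record("s2", 40.0)
fast_pick = lrt.pick()
print(fast_pick)  # s2: 40 ms beats 120 ms while both are idle

lrt.stats["s2"].active = 3
busy_pick = lrt.pick()
print(busy_pick)  # s1: s2 now scores 40 * 4 = 160, above s1's 120
```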

4. IP Hash

With IP hash, the load balancer hashes the client’s IP address and uses the result to choose a server. As long as the pool does not change, a given client is routed to the same server, which makes the method a simple way to get session persistence. Clients that share an address, for example behind a NAT gateway, all land on the same server.
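A minimal sketch of the idea, assuming a fixed list of servers. It uses `hashlib` rather than Python's built-in `hash()`, because the built-in is randomized per process for strings and would not give a stable mapping across restarts.

```python
import hashlib
from typing import List


def ip_hash(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a server using a stable hash of the address."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["s1", "s2", "s3"]
chosen = ip_hash("198.51.100.24", servers)
print(chosen == ip_hash("198.51.100.24", servers))  # True: same client, same server
```

Because the index is taken modulo the pool size, adding or removing a server remaps many clients. Consistent hashing is a common refinement when that matters.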

5. Weighted Round Robin

Similar to round robin, but each server is assigned a weight based on its capacity. Servers with higher weights receive more requests. This is useful when there are servers with varying levels of power, memory, or storage in the pool.
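The simplest way to sketch this is to repeat each server in the rotation as many times as its weight. The server names and weights below are made up.

```python
from collections import Counter
from itertools import cycle
from typing import Dict, Iterator


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Rotate through servers, repeating each one `weight` times per cycle."""
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


wrr = weighted_round_robin({"big": 3, "small": 1})
picks = [next(wrr) for _ in range(8)]
print(picks)
# ['big', 'big', 'big', 'small', 'big', 'big', 'big', 'small']
print(Counter(picks))  # Counter({'big': 6, 'small': 2})
```

This version sends requests to the heavier server in bursts. Real balancers often interleave the picks more smoothly, but the long-run proportions are the same.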

6. Weighted Least Connections

Similar to the least connections method, but each server has an assigned weight that reflects its capacity. The balancer compares connection counts relative to those weights, so higher-capacity servers are allowed to carry more connections before they stop being preferred.
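One common way to express this is to pick the server with the lowest ratio of active connections to weight. The sketch below assumes that rule, and the names and numbers are hypothetical.

```python
from typing import Dict


def weighted_least_connections(active: Dict[str, int], weights: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections per unit of weight."""
    return min(active, key=lambda s: active[s] / weights[s])


active = {"big": 6, "small": 3}
weights = {"big": 4, "small": 1}

weighted_choice = weighted_least_connections(active, weights)
print(weighted_choice)  # big: 6 / 4 = 1.5 is lower than 3 / 1 = 3.0

# Plain least connections would have picked the other server.
unweighted_choice = min(active, key=lambda s: active[s])
print(unweighted_choice)  # small
```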

Load Balancing in the Cloud

Many modern applications are hosted in the cloud, where load balancing can be done at the infrastructure level by cloud providers. Cloud providers like AWS, Google Cloud, and Microsoft Azure offer load-balancing services that can dynamically adjust to changing traffic patterns and scale as needed. Cloud load balancers often come with additional features, such as geographic distribution, integrated health checks, and support for microservices architectures.

Cloud-based load balancing also supports advanced use cases, such as:

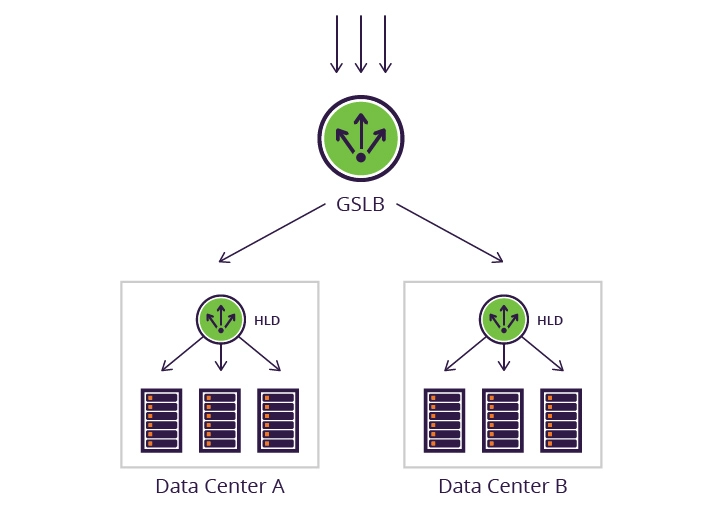

- Global Load Balancing: Distributing traffic across multiple data centers worldwide to improve latency and redundancy.

- Auto-scaling: Automatically adding or removing servers in response to traffic demands.

- Security: Many cloud load balancers come with built-in security features, like DDoS protection and encryption, providing an additional layer of defense against malicious attacks.

Load Balancing and Microservices

In microservices architectures, where applications are divided into small, independent services, load balancing becomes even more critical. With many services communicating with each other, there’s a need to manage traffic between services efficiently. In these setups, load balancing can happen at multiple levels:

- API Gateway Load Balancing: The API gateway acts as a load balancer for requests coming into the application from external clients.

- Service Mesh Load Balancing: A service mesh handles traffic within the microservices ecosystem, often incorporating features like service discovery and monitoring.

- Client-Side Load Balancing: In some cases, the client (e.g., a microservice) decides which instance of another service to send a request to, reducing dependency on a central load balancer.
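As an illustration of the client-side variant, the sketch below has the calling service pick an instance itself from a list it obtained through service discovery. The `REGISTRY` contents, the service name, and the addresses are all hypothetical, and random choice stands in for whatever policy the client actually uses.

```python
import random
from typing import Dict, List

# Hypothetical result of a service-discovery lookup: service name -> instances.
REGISTRY: Dict[str, List[str]] = {
    "orders": ["10.0.1.5:8080", "10.0.1.6:8080", "10.0.1.7:8080"],
}


def choose_instance(service: str) -> str:
    """Let the calling service pick a target instance without a central balancer."""
    instances = REGISTRY.get(service, [])
    if not instances:
        raise LookupError(f"no known instances for service {service!r}")
    return random.choice(instances)


target = choose_instance("orders")
print(target in REGISTRY["orders"])  # True
```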

Challenges in Load Balancing

While load balancing offers many benefits, it also comes with its own set of challenges:

- Session Persistence: Some applications require users to stay connected to the same server during a session. This can be challenging for load balancers and requires techniques like sticky sessions or storing session data in a shared database.

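- SSL Termination: Load balancers often handle SSL/TLS encryption, which adds complexity and processing overhead. Terminating TLS at the load balancer offloads that work from the backend servers, but the balancer then holds the certificates and private keys, and traffic between it and the backends is unencrypted unless it is re-encrypted, so this setup requires careful security management.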

- Latency and Bottlenecks: If the load balancer itself becomes a bottleneck, it can slow down the application. High-availability configurations with multiple load balancers can alleviate this issue.

- Configuration Complexity: Managing a distributed system with load balancing across multiple levels requires significant configuration, monitoring, and tuning.
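The sticky-session technique mentioned in the first challenge can be sketched as a lookup table from session ID to server. New sessions are assigned in rotation and later requests reuse the stored assignment. The class name and the session IDs are invented for illustration.

```python
from itertools import cycle
from typing import Dict, List


class StickyBalancer:
    def __init__(self, servers: List[str]) -> None:
        self._rotation = cycle(servers)
        self._assigned: Dict[str, str] = {}  # session ID -> pinned server

    def route(self, session_id: str) -> str:
        """Pin each new session to the next server, then keep returning that server."""
        if session_id not in self._assigned:
            self._assigned[session_id] = next(self._rotation)
        return self._assigned[session_id]


sticky = StickyBalancer(["s1", "s2"])
routes = [sticky.route(sid) for sid in ("alice", "bob", "alice", "carol", "bob")]
print(routes)  # ['s1', 's2', 's1', 's1', 's2']
```

The table lives in the balancer's memory here, so it is lost on restart and is not shared between balancers. Keeping session data in a shared store avoids that dependency because any server can then handle any request.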

Conclusion

Load balancing is a fundamental part of modern backend systems, providing performance improvements, fault tolerance, and scalability. By distributing traffic across multiple servers using algorithms like round robin, least connections, or IP hash, load balancers help maintain a reliable and responsive user experience. Whether used in traditional server clusters or modern microservices architectures, load balancing lets systems absorb high volumes of traffic efficiently, and its importance grows with demand for scalable, highly available applications.